Cerebras Releases WSE-3, the Fastest AI Chip to Date, to Cut AI Model Training Time

Semiconductor company Cerebras has announced its third-generation wafer-scale chip, the WSE-3, which delivers twice the performance of the previous-generation WSE-2 at the same power consumption.

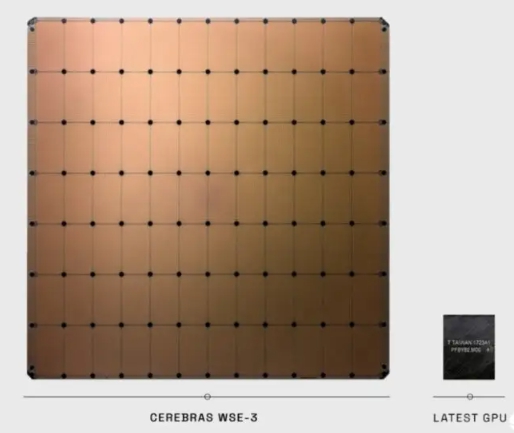

According to Cerebras, the WSE-3 is built on TSMC's 5nm process, packs 4 trillion transistors onto a single chip, and has 900,000 AI compute cores. It carries 44GB of on-chip SRAM, can be paired with 1.5TB, 12TB, or 1.2PB of external memory, and reaches a peak AI compute performance of 125 PFLOPS.

The CS-3 system, built around the WSE-3, supports up to 1.2PB of external memory and can train next-generation AI models ten times larger than GPT-4 and Gemini. Models of up to 24 trillion parameters fit in a single logical memory space, which greatly simplifies developers' work.

The CS-3 also scales to very large AI workloads. A four-system cluster can fine-tune a 70B-parameter model in one day, and at the maximum cluster size of 2,048 CS-3 systems, Llama 70B can be trained from scratch in one day. The CS-3 is also slated for use in the Condor Galaxy 3 AI supercomputer, which is currently under construction.

The Cerebras CS-3 system is also easy to program. Training a large model requires 97% less code than on GPUs, and a standard implementation of a GPT-3-sized model takes only 565 lines of code. G42, a technology group based in the United Arab Emirates, said it will build the Condor Galaxy 3 supercomputer from 64 Cerebras CS-3 systems, delivering 8 exaFLOPS of AI compute.
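The 8 exaFLOPS figure follows from the per-chip peak quoted earlier: 64 systems at 125 PFLOPS each. The short Python sketch below reproduces that arithmetic. It assumes one WSE-3 per CS-3 system and perfect linear scaling of peak throughput; the function and constant names are illustrative and not part of any Cerebras software.

```python
# Peak AI compute of a single WSE-3, in PFLOPS, as quoted in this article.
PEAK_PFLOPS_PER_SYSTEM = 125


def cluster_peak_exaflops(num_systems: int,
                          pflops_per_system: float = PEAK_PFLOPS_PER_SYSTEM) -> float:
    """Aggregate peak compute in exaFLOPS.

    Assumption: peak throughput adds up linearly across systems. Sustained
    training throughput on a real cluster will be lower than this peak.
    """
    if num_systems < 0:
        raise ValueError("num_systems must be non-negative")
    # 1 exaFLOPS = 1,000 PFLOPS
    return num_systems * pflops_per_system / 1000


if __name__ == "__main__":
    # Condor Galaxy 3 as described above: 64 CS-3 systems
    print(cluster_peak_exaflops(64))
    # Maximum cluster size mentioned above: 2,048 CS-3 systems
    print(cluster_peak_exaflops(2048))
```

Under the same assumptions, the 2,048-system maximum configuration works out to 256 exaFLOPS of peak compute. Both numbers are theoretical peaks, not measured training throughput.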